Even if you’re not writing to different physical drives, you can get faster backups with Microsoft SQL Server when you back up to multiple files. Your exact numbers are going to vary based on your server.

Test round 1: Backing up to multiple files on one volume

In this test, the backup files were on one of the local NVMe SSDs, but not the same volume that the database’s data & log files lived on.

- 1 backup file: 9.1 minutes

- 2 backup files: 7.9 minutes (13% faster)

- 4 backup files: 5.1 minutes (43% faster)

- 8 backup files: 6.2 minutes (32% faster)

This isn’t meant to be a definitive, “Always use 4 backup files” kind of post, but just a starting point as to how much quicker your backups can get with this one easy change. As with all of the metrics in this post, the idea is to get you started on the road of testing your backups for quick wins on large servers.
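If you want to try this yourself, the change amounts to listing more than one `DISK =` target in the `BACKUP DATABASE` statement. Here is a minimal Python sketch that builds such a statement for any file count. The database name `MyDatabase`, the folder `D:\Backups`, the file naming pattern, and the `WITH COMPRESSION, STATS = 10` options are all assumptions for illustration, not the exact command used in the tests above.

```python
def striped_backup_sql(database: str, directory: str, file_count: int) -> str:
    """Build a BACKUP DATABASE statement that stripes across file_count files."""
    if file_count < 1:
        raise ValueError("file_count must be at least 1")

    db = database.replace("]", "]]")   # escape for a [bracketed] identifier
    folder = directory.rstrip("\\")    # avoid a doubled path separator

    targets = []
    for n in range(1, file_count + 1):
        # Hypothetical naming pattern: <database>_<n>.bak
        path = f"{folder}\\{database}_{n}.bak".replace("'", "''")
        targets.append(f"DISK = N'{path}'")

    return (
        f"BACKUP DATABASE [{db}]\nTO "
        + ",\n   ".join(targets)
        # COMPRESSION and STATS are assumed options, adjust to taste
        + "\nWITH COMPRESSION, STATS = 10;"
    )


# Print the statements for the file counts tested above.
for count in (1, 2, 4, 8):
    print(striped_backup_sql("MyDatabase", r"D:\Backups", count))
    print()
```

Run each generated statement against a test server and time it; the file count that wins on your hardware may not be the one that won here.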

Test round 2: Backing up to files on different volumes

In this round, each backup file was on its own local NVMe SSD – until we got to 8 files, since I’ve only got 4 extra NVMe drives on the i3en.metal:

- 1 backup file: 9.1 minutes

- 2 files, 2 volumes: 6.9 minutes (24% faster)

- 4 files, 4 volumes: 4.3 minutes (53% faster)

- 8 files, 4 volumes: 3.0 minutes (67% faster)

Spreading the load over different backup volumes gave me roughly double the speed improvement at 2 and 8 files compared with writing to a single volume (at 4 files the gap was narrower: 53% versus 43%). In this impractical example, I’m using local NVMe, and your server probably isn’t going to have that. However, you can get similar results by writing your backup files to different storage targets that have their own individual throughput that isn’t collectively throttled.
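To spread files across volumes, including the "8 files, 4 volumes" case where each volume gets two files, you can hand out targets round-robin. A rough Python sketch follows; the drive letters `E:` through `H:`, the `Backups` folder, the database name, and the `WITH` options are assumptions, so substitute whatever storage targets you actually have.

```python
def spread_backup_sql(database: str, volumes: list[str], file_count: int) -> str:
    """Build a BACKUP DATABASE statement with files dealt round-robin across volumes."""
    if not volumes:
        raise ValueError("need at least one volume")
    if file_count < 1:
        raise ValueError("file_count must be at least 1")

    targets = []
    for n in range(file_count):
        # File 1 goes to the first volume, file 2 to the second, and so on,
        # wrapping around once there are more files than volumes.
        folder = volumes[n % len(volumes)].rstrip("\\")
        targets.append(f"DISK = N'{folder}\\{database}_{n + 1}.bak'")

    return (
        f"BACKUP DATABASE [{database}]\nTO "
        + ",\n   ".join(targets)
        # COMPRESSION and STATS are assumed options
        + "\nWITH COMPRESSION, STATS = 10;"
    )


# Hypothetical volumes: four separate drives, eight backup files.
volumes = [r"E:\Backups", r"F:\Backups", r"G:\Backups", r"H:\Backups"]
print(spread_backup_sql("MyDatabase", volumes, 8))
```

The same function works for network targets: pass UNC paths instead of drive letters, as long as each target has its own throughput.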

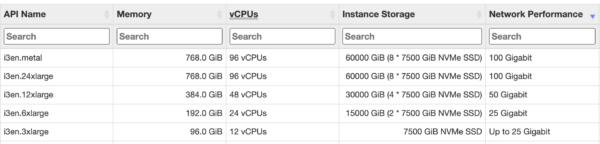

In the prairie days when your grandmother backed up her SQL Server, she hesitated to write her backups over the network because her network consisted of two tin cans connected with string. Today, thanks to the simple ec2instances.info, you can see the network bandwidth available to your instance type, and it ain’t no string:

This is why I love the i3 series: tons of local solid state, plus great network throughput. The network bandwidth alone isn’t enough, of course: you also have to provision fast storage targets on the other side if you need to back up a multi-terabyte database. Some shops use i3en servers as staging area file servers – landing their backups there to get ’em written quickly, and then migrating the backups to more cost-effective and redundant storage for the longer term. (There are more cost-effective instance types if your backup needs are smaller, of course.)

Test round 3: Backing up to NUL:

When you’re doing backup speed testing, you can back up to DISK='NUL:' and SQL Server doesn’t actually write the backup file to disk – it just discards the data. This helps measure how fast SQL Server’s backup processes can read the data from disk and compress it on this particular server.

- 1 NUL file: 9.4 minutes

- 2 NUL files: 6.8 minutes (28% faster)

- 4 NUL files: 4.3 minutes (54% faster)

- 8 NUL files: 2.9 minutes (70% faster)

The numbers here are useful in comparison to test 2’s numbers: when writing to very fast backup volumes, you can actually approach the speed of simply discarding the data! In the 8-file example, when I just threw the data away by backing up to NUL, I finished in 2.9 minutes. Actually writing the data out to local NVMe SSDs got me there in 3.0 minutes. I’ll take it.
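For reference, a multi-file NUL test just repeats the `NUL:` device once per "file". The Python sketch below generates that statement. The database name and the `WITH` options are assumptions. In particular, `COPY_ONLY` is my addition here (the tests above may not have used it), included so a throwaway test backup does not become the base for later differential backups.

```python
def nul_backup_sql(database: str, file_count: int) -> str:
    """Build a BACKUP DATABASE statement that discards its output to NUL."""
    if file_count < 1:
        raise ValueError("file_count must be at least 1")

    # Each repeated NUL: device acts as one more backup "file".
    targets = ["DISK = N'NUL:'"] * file_count

    return (
        f"BACKUP DATABASE [{database}]\nTO "
        + ",\n   ".join(targets)
        # COPY_ONLY, COMPRESSION, and STATS are assumed options
        + "\nWITH COPY_ONLY, COMPRESSION, STATS = 10;"
    )


# Hypothetical database name; 8 NUL targets as in the last test above.
print(nul_backup_sql("MyDatabase", 8))
```

Nothing produced this way can be restored, so treat it strictly as a benchmark of read and compression speed.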
